Meta has released early versions of Llama 3, its newest large language model, along with a real-time image generator that updates images as users type their prompts. The move is aimed at competing with OpenAI, the Microsoft-backed leader in generative AI.

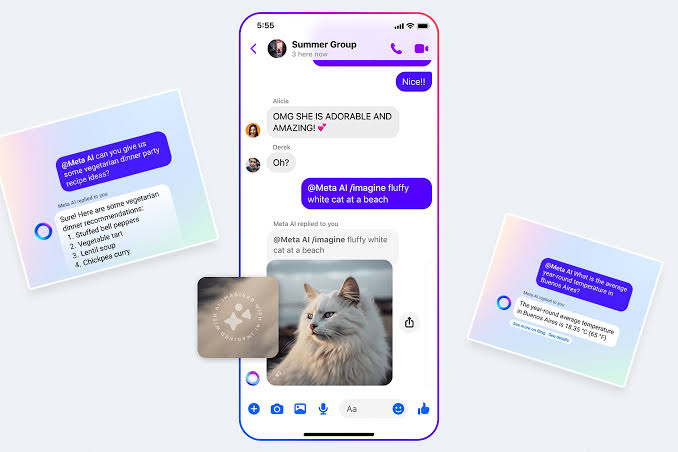

These models will power Meta AI, the company's virtual assistant, which Meta bills as the most capable of the free-to-use AI assistants. Meta AI will be built into Facebook, Instagram, WhatsApp, and Messenger, and will also be available on a standalone website.

Meta says future versions of Llama 3 will add more advanced capabilities, such as generating longer multi-step plans and multimodality, meaning the ability to generate both text and images.

Mark Zuckerberg said the largest version of Llama 3, which has 400 billion parameters, is still being trained and is already scoring about 85 on MMLU (Massive Multitask Language Understanding), a widely used benchmark of a model's knowledge and reasoning across many subjects. According to Zuckerberg, the two smaller versions being released now, with 8 billion and 70 billion parameters, scored around 82 on MMLU.

Meta also announced a partnership with Google to include real-time search results in the assistant's responses, adding to its existing arrangement with Microsoft's Bing.

Meta is also expanding English-language Meta AI beyond the U.S. to Australia, Canada, Ghana, Jamaica, Malawi, New Zealand, Nigeria, Pakistan, Singapore, South Africa, Uganda, Zambia, and Zimbabwe, and plans to add more countries.

The decision to make Llama 3 open source sets Meta apart from other leading companies such as OpenAI and Google, which typically keep their most advanced models closed. An openly available model is easier for others to use, scrutinize, and share across the industry, which could spur innovation and the development of new apps and tools.